by Balaswamy Kaladi

In today's world of big data, analytics, and data warehousing, data volumes continue to grow, seemingly matched only by businesses' appetite to exploit data for commercial advantage, and to do so ever more quickly.

Historically, to deal with the kind of large data volumes that are now becoming commonplace, expensive and heavyweight technologies from vendors such as Teradata, Netezza and Vertica were needed. These typically cost millions of dollars, and required hardware, software, and consulting.

Just as Amazon Web Services (AWS) has changed forever how IT infrastructure can be delivered (on demand, scalably, quickly, and cost-effectively), Amazon Redshift is doing the same for data warehousing and big data analytics. It offers a massively parallel columnar data store that can handle billions of rows of data, yet can be up and running in a few minutes and operated for anywhere from a few cents to a few dollars per hour.

Let's start with the technology options available for building a data warehouse followed by the benefits of Amazon Redshift.

Basically, there are two options available for building a data warehouse:

- Row-wise storage oriented databases

- Column-wise storage oriented databases

Row-wise storage oriented databases

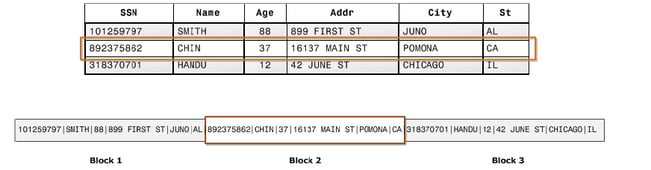

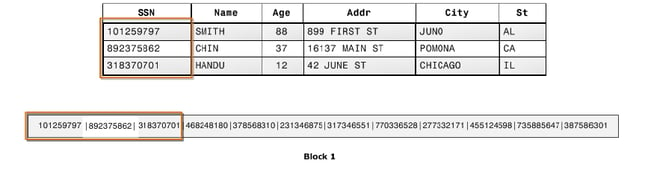

In row-wise database storage, data blocks store values sequentially for each consecutive column making up the entire row. If block size is smaller than the size of a record, storage for an entire record may take more than one block. If block size is larger than the size of a record, storage for an entire record may take less than one block, resulting in an inefficient use of disk space.

Secondly, in row-wise database storage, every query has to read all of the columns for all of the rows in the blocks it touches, including columns you didn't select. This approach creates a significant performance bottleneck in data warehouses, where tables often have many columns but queries use only a few.

Thirdly, to optimize the performance of a row-wise storage based system used as a data warehouse, we have to use a number of techniques, including creating materialized views and pre-aggregated rollup tables, building indexes on every possible predicate combination, implementing data partitioning so that the query optimizer can prune partitions, and performing index-based joins.

Databases such as Oracle Database Server (version 11 and earlier), Microsoft SQL Server, MySQL, and PostgreSQL are row-wise storage oriented database systems. These systems have been traditionally used for data warehousing, but they are better suited for transactional processing (OLTP) than for analytics.

Column-wise storage oriented databases

In column-wise database storage, each data block stores the values of a single column for multiple rows instead of packing whole rows into a block. This layout is more I/O efficient for read-only queries because they read from disk (or from memory) only the columns that the query accesses.
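To make the difference between the two layouts concrete, here is a minimal Python sketch. The table, the column names, and the one-row-per-block simplification are illustrative assumptions, not how any particular database lays out its blocks.

```python
# Hypothetical three-row table used only for illustration.
columns = ("id", "name", "age")
rows = [
    (1, "Alice", 34),
    (2, "Bob", 45),
    (3, "Carol", 29),
]

# Row-wise layout: each block packs every column of a row together
# (one row per block here, to keep the sketch small).
row_blocks = [list(row) for row in rows]

# Column-wise layout: each block holds the values of a single column
# for all rows.
column_blocks = {
    name: [row[i] for row in rows] for i, name in enumerate(columns)
}

# A query such as SELECT AVG(age) needs only the "age" block in the
# column-wise layout, whereas the row-wise layout forces a pass over
# every block, including the id and name values it does not need.
ages_from_columns = column_blocks["age"]
ages_from_rows = [block[columns.index("age")] for block in row_blocks]

average_age = sum(ages_from_columns) / len(ages_from_columns)
print(average_age)
```

Both layouts return the same ages; the difference is how many blocks must be touched to get them.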

After faster I/O, the next biggest benefit of using a column-oriented database is improved compression. Because every column is packed into its own set of blocks, every physical block contains the same data type. When all the data is the same data type, the database can use extremely efficient compression algorithms. As a result, you need less storage compared to a row-oriented database. This approach also results in significantly less I/O because the same data is stored in fewer blocks.
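One way to see why same-typed column blocks compress well is run-length encoding, where repeated neighbouring values collapse into a value and a count. The sketch below is a simplified illustration with a made-up `country` column; it is not Amazon Redshift's implementation, and the function names are hypothetical.

```python
from itertools import groupby


def rle_encode(values):
    """Collapse runs of equal neighbouring values into (value, count) pairs."""
    return [(value, len(list(run))) for value, run in groupby(values)]


def rle_decode(pairs):
    """Expand (value, count) pairs back into the original sequence."""
    return [value for value, count in pairs for _ in range(count)]


# A column block holds values of one column only, so long runs of
# repeated values are common (for example, a sorted country column).
country_column = ["US"] * 5 + ["IN"] * 3 + ["UK"] * 2

encoded = rle_encode(country_column)
print(encoded)

# Decoding restores the original block exactly.
assert rle_decode(encoded) == country_column
```

Ten stored values shrink to three pairs here. A row-wise block, which interleaves ids, names, and other columns, would rarely contain runs like this.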

Let's take an example. Suppose a table contains 100 columns and a query fetches only 5 of them. In a column-wise storage database, the query reads only about 5 percent of the data contained in the table (assuming the columns are of similar size), because it reads only the blocks that contain those 5 columns. In contrast, a row-wise storage database would read the 95 unneeded columns as well, because they are stored in the same blocks.
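The arithmetic behind this example can be sketched in a few lines of Python. The row count and the fixed 8 bytes per value are assumptions chosen only to make the numbers concrete; real tables have columns of varying width and compression changes the totals.

```python
def bytes_scanned(num_rows, total_cols, queried_cols,
                  bytes_per_value=8, columnar=True):
    """Estimate bytes read for a full scan, assuming equal-width columns."""
    # A column store reads only the queried columns; a row store reads
    # every column because whole rows share the same blocks.
    cols_read = queried_cols if columnar else total_cols
    return num_rows * cols_read * bytes_per_value


rows = 1_000_000  # hypothetical table size

row_store = bytes_scanned(rows, total_cols=100, queried_cols=5, columnar=False)
col_store = bytes_scanned(rows, total_cols=100, queried_cols=5, columnar=True)
fraction = col_store / row_store

print(f"row store:    {row_store:,} bytes")
print(f"column store: {col_store:,} bytes ({fraction:.0%} of the row store)")
```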

So, this approach makes column-oriented databases a better choice than row-oriented databases for data warehousing.

Now, let's look at the key benefits of using Amazon Redshift for building a data warehouse:

Amazon Web Services (AWS) is a secure cloud services platform. It offers many services, including compute, storage, database, and networking services. Amazon Redshift is one of the database services from AWS.

As discussed earlier, Amazon Redshift is a massively parallel columnar data warehouse service. A massively parallel processing (MPP) architecture allows you to use all of the resources available in the cluster for processing data, thereby dramatically increasing the performance of petabyte-scale data warehouses. MPP data warehouses also allow you to improve performance simply by adding more nodes to the cluster.

As a columnar MPP technology, Amazon Redshift offers key benefits for high-performance, cost-effective data warehousing, including efficient compression, reduced I/O, and lower storage requirements. It is based on ANSI SQL, so you can run existing queries with little or no modification. As a result, it has become a popular choice for enterprise data warehouses and data marts today.

Architecture and capabilities

Note: You can save up to 75% over on-demand rates by committing to use Amazon Redshift for a 1 or 3 year term.

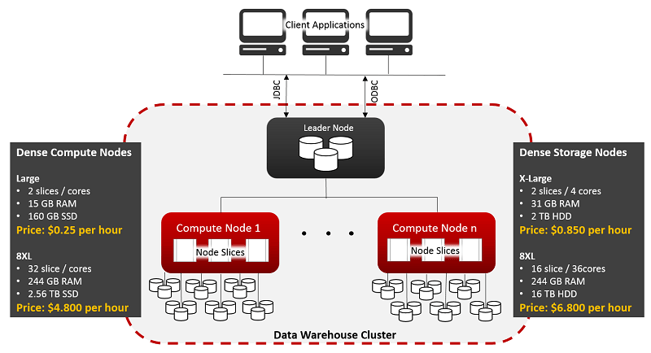

The cluster is the core component of Amazon Redshift. It comprises one leader node and a set of compute nodes. The leader node manages communication between client applications and the compute nodes. Amazon Redshift provides custom Java Database Connectivity (JDBC) and Open Database Connectivity (ODBC) drivers, which you can download from the Amazon Redshift console and use to connect from client applications.

The leader node compiles the code and assigns the compiled code to individual compute nodes in the cluster. The compute nodes execute the compiled code and send intermediate results back to the leader node for final aggregation. Each compute node has its own CPU, memory, and attached disk storage. Each compute node is further partitioned into slices, which process the portion of the workload assigned to the compute node.
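The flow described above (rows spread across slices, each slice computing an intermediate result, the leader combining them) can be sketched as a toy Python model. The node and slice counts, the modulo distribution rule, and all function names are assumptions for illustration; the loop also runs sequentially, whereas slices in a real cluster work in parallel.

```python
from collections import defaultdict

NUM_COMPUTE_NODES = 2   # hypothetical cluster size
SLICES_PER_NODE = 2     # hypothetical slices per compute node


def slice_for(distribution_key, num_slices):
    """Pick a slice for a row. A simple modulo stands in for a real hash."""
    return distribution_key % num_slices


def distribute(rows, num_slices):
    """Spread (customer_id, amount) rows across slices by customer_id."""
    slices = defaultdict(list)
    for customer_id, amount in rows:
        slices[slice_for(customer_id, num_slices)].append((customer_id, amount))
    return slices


def slice_partial_sum(slice_rows):
    """Work done by one slice: sum only the rows it holds."""
    return sum(amount for _, amount in slice_rows)


def leader_total(slices):
    """Work done by the leader: combine the intermediate results."""
    partials = [slice_partial_sum(rows) for rows in slices.values()]
    return sum(partials)


sales = [(1, 10.0), (2, 20.0), (3, 5.0), (4, 15.0), (5, 50.0)]
slices = distribute(sales, NUM_COMPUTE_NODES * SLICES_PER_NODE)
total = leader_total(slices)
print(total)
```

Because each slice only sees its own share of the rows, adding nodes (and therefore slices) reduces the work per slice, which is the intuition behind scaling an MPP cluster.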

Amazon Redshift offers two types of compute nodes:

- Dense compute nodes (more processing power and less storage power)

- Dense storage nodes (more storage power and less processing power)

Fast

Amazon Redshift uses columnar storage, data compression, and zone maps to reduce the amount of I/O needed to perform queries. Interleaved sorting enables fast performance without the overhead of maintaining indexes.
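The idea behind zone maps can be illustrated with a small sketch: keep the minimum and maximum value of each block, and skip any block whose range cannot satisfy the filter. The block contents and function names below are made up for illustration, and this is a simplified model rather than Amazon Redshift's internal implementation.

```python
def build_zone_map(blocks):
    """Record the (min, max) value of every block."""
    return [(min(block), max(block)) for block in blocks]


def scan_greater_than(blocks, zone_map, threshold):
    """Return values > threshold and the number of blocks actually read."""
    matches = []
    blocks_read = 0
    for block, (_low, high) in zip(blocks, zone_map):
        if high <= threshold:
            # No value in this block can match, so skip the I/O entirely.
            continue
        blocks_read += 1
        matches.extend(value for value in block if value > threshold)
    return matches, blocks_read


# Sorted data keeps each block's range narrow, which makes skipping effective.
blocks = [[1, 4, 7], [10, 12, 15], [20, 22, 29], [30, 35, 38]]
zone_map = build_zone_map(blocks)

matches, blocks_read = scan_greater_than(blocks, zone_map, threshold=21)
print(matches, blocks_read)
```

Only two of the four blocks are read for this filter, which is why sorting and zone maps together can stand in for indexes on many analytic queries.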

Amazon Redshift employs an MPP architecture to take advantage of all available resources by parallelizing and distributing SQL operations. The underlying hardware is designed for high performance data processing, using local attached storage to maximize throughput between the CPUs and drives, and a 10 GigE mesh network to maximize throughput between nodes.

Inexpensive

Amazon Redshift requires no long-term commitments or upfront costs. This pricing approach frees you from the capital expense and complexity of planning and purchasing data warehouse capacity ahead of your needs. Charges are based on the size and number of nodes in your cluster.

There is no additional charge for backup storage up to 100 percent of your provisioned storage. For example, if you have an active cluster with two XL nodes for a total of 4 TB of storage, AWS provides up to 4 TB of backup storage on Amazon S3 at no additional charge. Backup storage beyond the provisioned storage size, and backups stored after your cluster is terminated, are billed at standard Amazon S3 rates. There is no data transfer charge for communication between Amazon S3 and Amazon Redshift.
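The backup storage rule above can be expressed as a small calculation. This sketch returns only the billable volume in TB; it deliberately leaves out prices because the Amazon S3 rate is not stated here, and the function name is hypothetical.

```python
def billable_backup_tb(provisioned_tb, backup_tb, cluster_active=True):
    """Return the backup storage (in TB) billed at standard Amazon S3 rates."""
    if not cluster_active:
        # Backups kept after the cluster is terminated are billed in full.
        return float(backup_tb)
    # While the cluster is active, only backup storage beyond the
    # provisioned storage size is billed.
    return max(0.0, float(backup_tb) - float(provisioned_tb))


provisioned = 4.0  # for example, two nodes totalling 4 TB

within_allowance = billable_backup_tb(provisioned, backup_tb=4.0)
over_allowance = billable_backup_tb(provisioned, backup_tb=5.5)
after_termination = billable_backup_tb(provisioned, backup_tb=3.0,
                                       cluster_active=False)

print(within_allowance, over_allowance, after_termination)
```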

Fully managed

Amazon Redshift automatically detects and replaces any failed node in your data warehouse cluster. It makes your replacement node available immediately and loads your most frequently accessed data first so that you can resume querying your data as quickly as possible. Because Amazon Redshift mirrors your data across your cluster, it uses the data from another node to rebuild the failed node. The cluster is in read-only mode until a replacement node is provisioned and added to the cluster, which typically takes only a few minutes.

Scalable

With a few clicks in the console you can easily change the number and type of nodes in your data warehouse as your performance or capacity needs change. Amazon Redshift enables you to start with as little as a single 160 GB node and scale up all the way to a petabyte or more of compressed user data using many nodes.

While resizing, Amazon Redshift places your existing cluster into read-only mode, provisions a new cluster of your chosen size, and then copies data from your old cluster to your new one in parallel. During this process, you pay only for the active Amazon Redshift cluster. You can continue running queries against your old cluster while the new one is being provisioned. After your data has been copied to your new cluster, Amazon Redshift automatically redirects queries to your new cluster and removes the old cluster.

Security is built-in

To help provide data security, you can run Amazon Redshift inside a virtual private cloud based on the Amazon Virtual Private Cloud (Amazon VPC) service. You can use the software-defined networking model of the VPC to define firewall rules that restrict traffic based on the rules you configure. Amazon Redshift supports SSL-enabled connections between your client application and your Amazon Redshift data warehouse cluster, which enables data to be encrypted in transit.

The Amazon Redshift compute nodes store your data, but the data can be accessed only from the cluster's leader node. This isolation provides another layer of security.

Amazon Redshift is not ideally suited for the following usage patterns:

Small datasets: Amazon Redshift is built for parallel processing across a cluster. If your dataset is less than 100 gigabytes, you're not going to get all the benefits that Amazon Redshift has to offer, and Amazon RDS may be a better solution.

OLTP: Amazon Redshift is designed for data warehousing workloads, delivering extremely fast and inexpensive analytic capabilities. If you require a fast transactional system, you might want to choose a traditional relational database system built on Amazon RDS or a NoSQL database such as Amazon DynamoDB.

Unstructured data: Data in Amazon Redshift must be structured by a defined schema. Amazon Redshift doesn't support an arbitrary schema structure for each row. If your data is unstructured, you can perform extract, transform, and load (ETL) on Amazon EMR to get the data ready for loading into Amazon Redshift. For JSON data, you can store key-value pairs and use the native JSON functions in your queries.
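As a rough illustration of what "structured by a defined schema" means for loosely structured input, the following Python sketch flattens JSON events into fixed-width rows. It runs locally and only mimics the kind of transformation an ETL step might perform; the schema, the field names, and the sample events are all hypothetical.

```python
import json

# Hypothetical target schema: every row must have exactly these columns.
SCHEMA = ("event_id", "user_id", "event_type")


def to_row(raw_json, schema=SCHEMA):
    """Project one JSON document onto the fixed schema.

    Missing fields become None (NULL when loaded); fields outside the
    schema are dropped.
    """
    record = json.loads(raw_json)
    return tuple(record.get(column) for column in schema)


raw_events = [
    '{"event_id": 1, "user_id": 42, "event_type": "click", "extra": {"x": 1}}',
    '{"event_id": 2, "event_type": "view"}',
]

rows = [to_row(event) for event in raw_events]
print(rows)
```

Every output row has the same three columns regardless of what the source document contained, which is the shape a fixed-schema table expects.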

BLOB data: If you plan on storing binary large object (BLOB) files such as digital video, images, or music, you might want to consider storing the data in Amazon S3 and referencing its location in Amazon Redshift. In this scenario, Amazon Redshift keeps track of metadata (such as item name, size, date created, owner, location, and so on) about your binary objects, but the large objects themselves are stored in Amazon S3.
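The metadata-only pattern for binary objects might look like the sketch below: the row destined for the warehouse carries the item name, size, creation date, owner, and an S3 location, while the bytes themselves stay out of the table. The class name, bucket, and key are hypothetical, and the sketch does not upload anything or talk to AWS.

```python
from dataclasses import dataclass
from datetime import datetime, timezone


@dataclass(frozen=True)
class MediaObjectRow:
    """Metadata row kept in the warehouse; the object itself lives in S3."""
    item_name: str
    size_bytes: int
    date_created: datetime
    owner: str
    location: str  # S3 URI pointing at the stored object


def metadata_row(item_name, payload, owner, bucket, key, created):
    """Build the metadata row for an object assumed to be stored at bucket/key."""
    return MediaObjectRow(
        item_name=item_name,
        size_bytes=len(payload),
        date_created=created,
        owner=owner,
        location=f"s3://{bucket}/{key}",
    )


video_bytes = b"\x00" * 2048  # stand-in for a real video file
row = metadata_row(
    item_name="intro.mp4",
    payload=video_bytes,
    owner="marketing",
    bucket="example-media-bucket",   # hypothetical bucket name
    key="videos/intro.mp4",          # hypothetical object key
    created=datetime(2017, 1, 15, tzinfo=timezone.utc),
)
print(row.location, row.size_bytes)
```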

Conclusion

Overall, Amazon Redshift has definite benefits, and there are many reasons to try it out for building a data warehouse:

- High speed redundant direct connect lines

- Load billions of rows in minutes

- All data in private VPC

- All data encrypted with private on-premises hardware keys

- Encryption of data, transport, backups, partial spills

- Audit of all SQL actions

- Audit of all configuration changes


Balaswamy is a senior consultant with expertise in Informatica, Oracle Data Integrator, Amazon Web Services, and UNIX shell scripting and has worked extensively on global supply chain systems.
